Google Search Console - Fix gray index - WordPress robots.txt

You work hard on your website, write great content and optimize for search engines using every trick in the book. But you’re still not ranking as well as you’d hoped? The cause could be the “gray index” in Google Search Console. Let’s optimize indexing together by cleaning up your index!

To fix the gray index in the Google Search Console for WordPress, adjust your robots.txt. This adjustment helps to prevent unnecessary indexing and optimizes the visibility of your website in search results.

In this article, I’ll show you how to fix the gray index in Google Search Console with a few simple steps. Use my optimized robots.txt file to solve many problems in one go.

What is the “gray index”?

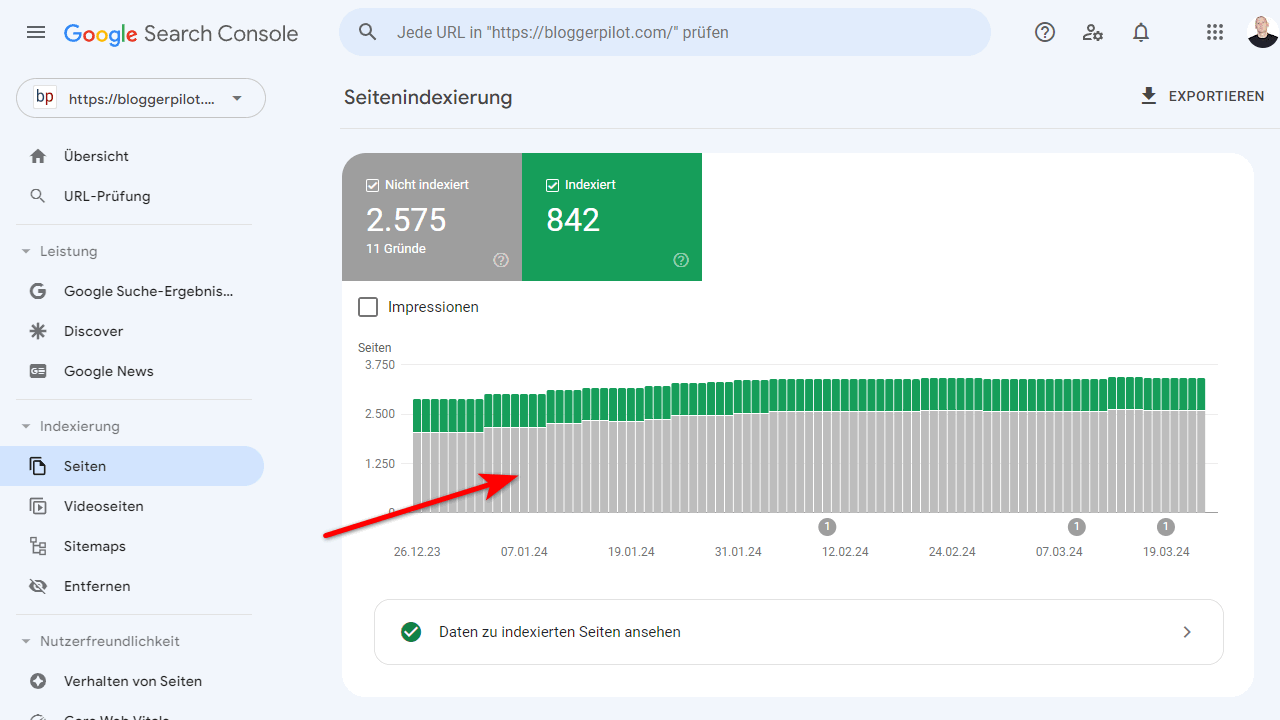

In the Search Console, under Indexing > Pages, you can see which pages of your website have been indexed by Google. The indexed pages are shown in green. But there is also a gray area: this is the “gray index”, containing all pages that Google has crawled but does not show in the search results.

Why does Google not index some pages? There can be many reasons for this – technical errors, duplicate content, incorrect tags and more. But without indexing, you have no chance of rankings and SEO traffic. That’s why it’s essential that you regularly check and clean up the gray index.

I admit that I didn’t pay attention to the gray index at first. But when I saw customer websites with hundreds or even thousands of non-indexed URLs, I realized how important this maintenance is. Especially with large sites, the loss of traffic due to non-indexing can otherwise be huge.

So get to work on the gray index! I’ll now show you the most common problems and how you can fix them.

A clean robots.txt prevents unnecessary indexing

If you would rather not read the whole article and just want a solution right away, I’ll start with the robots.txt, which you can copy and paste into your site.

A good place to start is to specify in your robots.txt file which areas of your website should be crawled. Here is my recommendation for a standard WordPress installation:

User-agent: *

Disallow: /cgi-bin/

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /wp-login.php

Disallow: /wp-content/plugins/

Disallow: /wp-content/cache/

Disallow: /wp-content/themes/

Disallow: */page/

Disallow: /*?

Disallow: /?s=

Allow: /wp-admin/admin-ajax.php

Sitemap: https://your-domain.com/sitemap.xml

With the disallow rules, you exclude all system and plugin directories that are irrelevant for the user. You also block URLs with question mark parameters and the search page. The Allow rule is essential so that WordPress Ajax functions can still be accessed.

Also enter the URL of your XML sitemap at the end so that Google can find it easily.

This limits the crawl area to the bare minimum and prevents unnecessary indexing.
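If you want to sanity-check rules like these before uploading the file, a small script can help. The following is a minimal sketch in Python, not an official tool: it re-implements Google-style matching as I understand it from Google’s documentation (`*` as a wildcard, the longest matching rule wins, and Allow wins a tie). The function names `pattern_to_regex` and `is_crawl_allowed` are my own, and the rule list is simply copied from the robots.txt above.

```python
import re

# Rules copied from the robots.txt above (path patterns only).
RULES = [
    ("disallow", "/cgi-bin/"),
    ("disallow", "/wp-admin/"),
    ("disallow", "/wp-includes/"),
    ("disallow", "/wp-login.php"),
    ("disallow", "/wp-content/plugins/"),
    ("disallow", "/wp-content/cache/"),
    ("disallow", "/wp-content/themes/"),
    ("disallow", "*/page/"),
    ("disallow", "/*?"),
    ("disallow", "/?s="),
    ("allow", "/wp-admin/admin-ajax.php"),
]


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_crawl_allowed(path, rules=RULES):
    """Return True if `path` may be crawled under `rules`.

    Assumed precedence: the longest matching pattern wins,
    and Allow wins when two matching patterns have the same length.
    """
    best_len, allowed = -1, True  # no matching rule means crawling is allowed
    for kind, pattern in rules:
        if pattern_to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_len or (length == best_len and kind == "allow"):
                best_len, allowed = length, kind == "allow"
    return allowed


if __name__ == "__main__":
    for path in ["/my-post/", "/category/seo/page/2/", "/wp-admin/admin-ajax.php"]:
        print(path, "->", "allowed" if is_crawl_allowed(path) else "blocked")
```

Treat the result as a rough check only. Google’s own tools remain the reference for how your file is actually interpreted.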

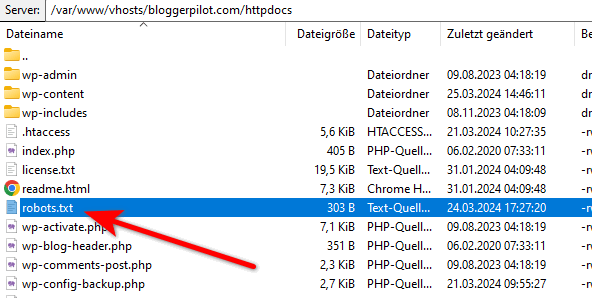

Copy the robots.txt file into the root directory of your WordPress installation. This is where the index.php and the wp-content directory are located. You only need to replace “your-domain.com” with your actual domain in the last line.

Manage pagination cleverly

Pagination, i.e. the page numbering of categories, archives or search results, is a common indexing problem. Google does not always understand that these pages are near-duplicate content.

Take, for example, a category overview with 200 articles on 20 subpages. Google could potentially take 20 similar pages into account in the rankings. This is usually not desirable, as these subpages offer little added value in terms of content.

So how can you prevent Google from indexing all subpages? Here are two common solutions:

robots.txt – Exclude all paginations

If you don’t want to use a plugin, you can block the paginated URLs via your robots.txt. The rule for this is already part of my robots.txt for WordPress above.

Here is the relevant line again:

Disallow: */page/

All URLs containing “/page/” are therefore excluded from crawling.

Rank Math SEO Plugin

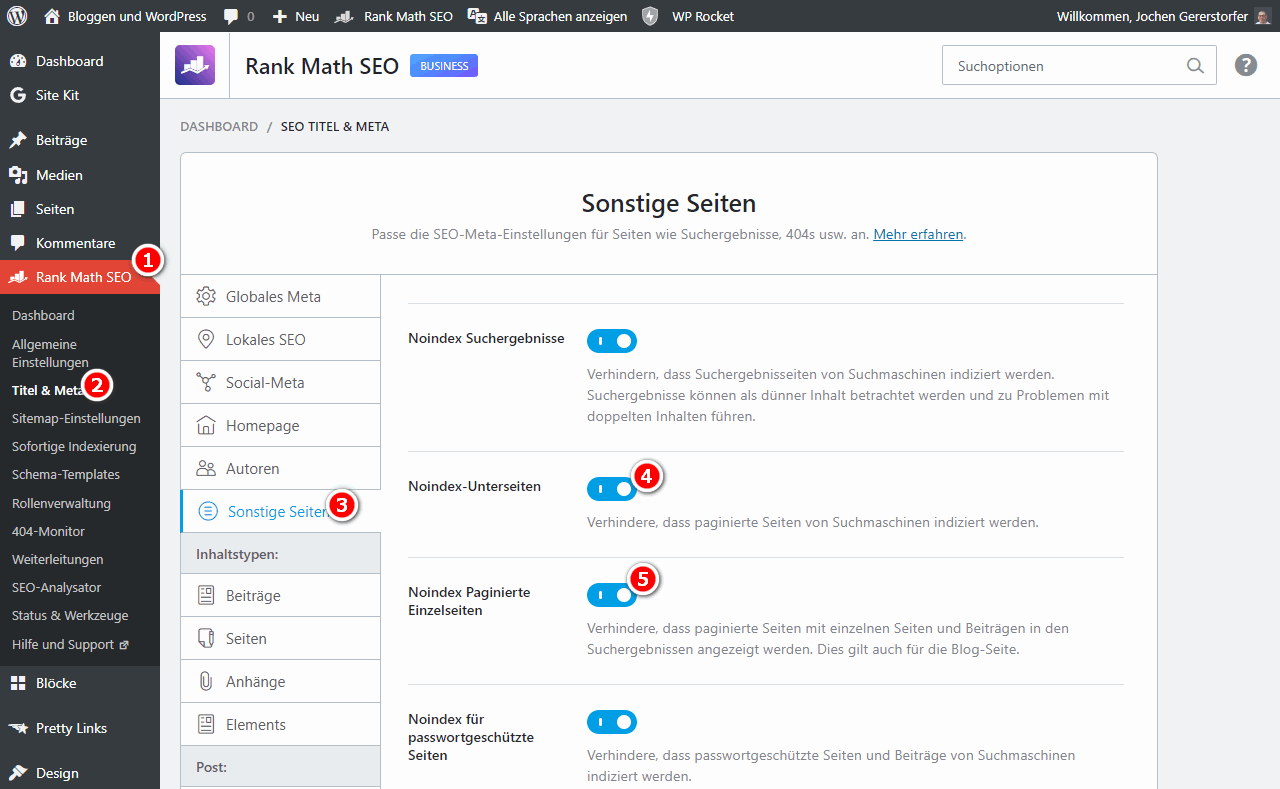

In Rank Math, the settings for indexing subpages can be found under “Title & Meta” > “Other pages”. With two clicks you have solved the problem.

Why is pagination optimization important? I’ve seen websites where Google has indexed thousands of these near-identical subpages. This not only wastes the available crawl budget, it also reduces the link flow to and the visibility of the actual content pages.

Fix canonical tags, parameter URLs and content duplicates

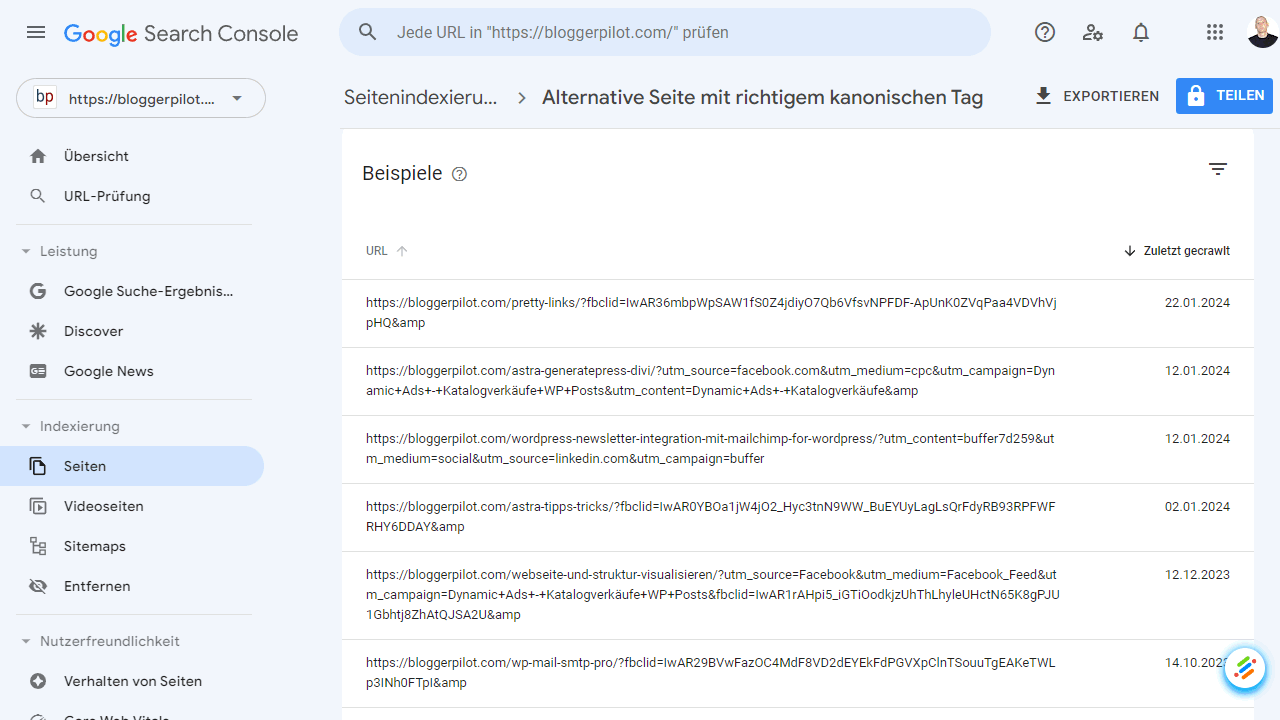

Google should be able to solve the problems with canonicals on its own. I am only concerned with URLs with parameters:

- ?amp

- ?fbclid

- ?utm_source

I have the following two instructions in robots.txt for this:

Disallow: /*?

Disallow: /?s=

This tells Googlebot not to crawl URLs with parameters. For me, this cleared hundreds of errors in the Google Search Console in one go.
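To see which clean URL a parameter URL from the gray index belongs to, you can strip the tracking parameters yourself. Here is a minimal sketch in Python that uses only the standard library. The function name `strip_tracking_params` and the parameter sets are my own assumptions, based on the three parameters listed above; extend them to match what you see in your own reports.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking parameters, based on the list above; extend as needed.
TRACKING_PARAMS = {"amp", "fbclid"}
TRACKING_PREFIXES = ("utm_",)


def strip_tracking_params(url):
    """Return `url` without tracking parameters and without the fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        # keep_blank_values=True so that a bare "?amp" is parsed and removed
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS and not key.startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


if __name__ == "__main__":
    print(strip_tracking_params("https://your-domain.com/post/?utm_source=news&fbclid=abc"))
```

Parameters that are not on the list, such as a shop filter, are kept, so you can run an exported URL list through the function and group the results by clean URL.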

Take a look at the gray index to see which pages are affected. Google will even tell you which other URL it has chosen – and you should make this the original.

Depending on the frequency and relevance of the error, there are various possible solutions.

I once had a blatant case where hundreds of URLs were ranking from a mirrored server subdomain instead of the actual website. Such a duplicate content bomb naturally kills the rankings. A nightmare! However, the problem was quickly solved with a few adjustments.

Detect 404, soft 404, 403 and 401 errors

Error pages also like to end up in the gray index. 404 errors occur when pages no longer exist. They should be harmless as long as they are not mass errors on important pages.

However, you have to be careful with soft 404 errors: here, pages without real content are delivered with a 200 OK status instead of the correct 404, which can waste crawl budget and inflate the index unnecessarily.
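If you want to look for soft 404 candidates yourself, a simple heuristic is often enough for a first pass. The following Python sketch is only an illustration under my own assumptions: the phrases in `NOT_FOUND_PHRASES`, the `MIN_WORDS` threshold and the function name `looks_like_soft_404` are made up for this example and do not reflect how Google detects soft 404s. You pass in the status code and HTML that you fetched with a tool of your choice.

```python
import re

# Assumed heuristics; tune both values for your own site.
NOT_FOUND_PHRASES = ("page not found", "nothing found")
MIN_WORDS = 50


def strip_tags(html):
    """Very rough tag removal, good enough for counting visible words."""
    return re.sub(r"<[^>]+>", " ", html)


def looks_like_soft_404(status_code, html):
    """Flag responses that return 200 but look like an error or an empty page."""
    if status_code != 200:
        return False  # a real 404 or 410 is not a *soft* 404
    text = strip_tags(html).lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    return len(text.split()) < MIN_WORDS


if __name__ == "__main__":
    print(looks_like_soft_404(200, "<h1>Page not found</h1>"))
```

Every flagged URL still needs a manual look, because a short but legitimate page, such as a contact page, would be flagged as well.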

401 means “Unauthorized” and often occurs on login forms or administration areas. These errors are usually harmless, but should be avoided if possible. I have solved this problem with the directive Disallow: /wp-login.php.

403 is usually also triggered by wp-login.php. Use my robots.txt to solve the problem.

In the gray index you can see all these error URLs at a glance. Then it’s up to you to find and fix the underlying causes.

Google currently seems to have a problem with 404 errors in particular. I get countless 404s from subpages that never existed.

Check 500 server errors and server logs

While the 4xx errors tend not to be critical, you definitely need to investigate 5xx server errors more closely!

These error codes indicate that the website has problems and cannot deliver content correctly. This not only leads to poor indexing, but also has a huge impact on the user experience.

Therefore, regularly check whether errors of this type appear in the gray index. At the same time, you should check the server logs to find the exact causes of errors such as software conflicts, memory leaks, etc.
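For the log check, a few lines of Python can already show you which URLs produce server errors most often. This is a minimal sketch that assumes your server writes the common or combined access log format used by Apache and nginx by default. The function name `count_server_errors` and the sample log line are made up for illustration, and the path to your log file depends on your hosting.

```python
import re
from collections import Counter

# Matches the request and status part of a common/combined log line, e.g.
# ... "GET /shop/ HTTP/1.1" 500 1234 ...
LOG_LINE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def count_server_errors(lines):
    """Count 5xx responses per (path, status) pair."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and match.group("status").startswith("5"):
            errors[(match.group("path"), match.group("status"))] += 1
    return errors


if __name__ == "__main__":
    # Hypothetical sample line; in practice, pass an open log file instead.
    sample = ['203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /shop/ HTTP/1.1" 500 1234 "-" "-"']
    for (path, status), count in count_server_errors(sample).most_common():
        print(status, path, count)
```

If the same path shows up again and again, start your search for the cause there.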

In many cases, the server does not have enough resources at the time of delivery and therefore runs into an error. Here are the three most common problem-solving approaches:

- You need a larger hosting package

- Increase the PHP memory_limit (contact your provider)

- Use a caching plugin

Conclusion: Regular index maintenance for better SEO

When I started to regularly check the gray index of my websites, many new optimization possibilities opened up to me. If you keep your index clean by preventing unwanted indexing and eliminating errors, you will achieve:

- Focused crawls without unnecessary waste of resources

- Optimal link flow to the important content pages

- Less content cannibalization and duplicate content problems

- Better visibility and rankings in the search results

In other words: A tidy gray index is the key to high-performance SEO! I therefore recommend that you monitor your indexing in the Search Console on a weekly basis.

The more often you check the status quo, the faster you will identify and fix problems. Optimize your page indexing systematically and consistently – and enjoy the fruits of your labor in the form of more qualified visitors!

👉 This is what happens next

- Implement the recommended changes to your robots.txt and monitor the improvements in Google Search Console.

- Expand your SEO knowledge with our guide to SEO factors to delve even deeper into the subject.

- Learn more about effective content marketing and how to write articles that rank by reading our blog post on AI article writing.